Zulip Chat Archive

Stream: Machine Learning for Theorem Proving

Topic: GPT-f paper

Stanislas Polu (Sep 09 2020 at 08:51):

Hi! We (OpenAI) just released a paper describing GPT-f, a Transformer-based automated theorem prover. It covers a lot of work we've been doing with Metamath ![]() and that could be applied to Lean.

and that could be applied to Lean.

Full abstract:

We explore the application of transformer-based language models to automated theorem proving. This work is motivated by the possibility that a major limitation of automated theorem provers compared to humans – the generation of original mathematical terms – might be addressable via generation from language models. We present an automated prover and proof assistant, GPT-f, for the Metamath formalization language, and analyze its performance. GPT-f found new short proofs that were accepted into the main Metamath library, which is to our knowledge, the first time a deep learning based system has contributed proofs that were adopted by a formal mathematics community.

arXiv link: https://arxiv.org/abs/2009.03393

Stanislas Polu (Sep 09 2020 at 08:52):

Any feedback/suggestions or questions are obviously welcome. I also hope to meet some of you at AITP next week to discuss it!

Johan Commelin (Sep 09 2020 at 08:55):

Nice! I'll try to read it soon

Johan Commelin (Sep 09 2020 at 08:55):

Abstract looks promising!

Jason Rute (Sep 09 2020 at 15:12):

I also look forward to reading it. (I’m on honeymoon this week, so don’t expect much of a response soon.)

Jason Rute (Sep 09 2020 at 15:13):

However, one quick question: Are you working on applying it to Lean, or is that an exercise for the reader?

Patrick Massot (Sep 09 2020 at 15:31):

Oh great, I remember you had to postpone your wedding because of Covid, but it looks like it happened in the end, congratulations!

Johan Commelin (Sep 09 2020 at 16:47):

@Stanislas Polu I haven't read through everything in detail, because I don't know enough about ML. But I'm very impressed by the fact that GPT-f found several shorter proofs than those that were in set.mm at the time.

Stanislas Polu (Sep 09 2020 at 18:18):

@Jason Rute we're still at an exploratory stage, but short answer is yes, definitely!

Stanislas Polu (Sep 09 2020 at 18:21):

@Johan Commelin Thank you! (I have to admit we were ourselves super excited by this result as well :))

Jesse Michael Han (Sep 09 2020 at 20:02):

during proof search, is the model conditioned on the proof tree / previously expanded goals?

Stanislas Polu (Sep 10 2020 at 06:54):

No it's not due to the size of the expressions involved being already quite large. That being said, we experimented with conditioning on the top goal and were not able to demonstrate a huge lift. But this was very exploratory so I wouldn't bet my money on it.

Wojciech Nawrocki (Sep 11 2020 at 00:26):

This is impressive! And it makes me quite excited for what the future holds. Two small corrections if you care about that sort of thing:

Pg. 8, the ring footnote points to the class rather than the tactic

Table 3: Matmath -> Metamath?

Stanislas Polu (Sep 11 2020 at 09:43):

Thanks a lot @Wojciech Nawrocki for the kind words as well as the feedback. Duly noted and factored in the next version of the paper :+1: Thanks!

Jason Rute (Sep 13 2020 at 21:43):

@Stanislas Polu What is considered a valid proof step? GPT-f will return both a theorem and substitutions, which then must unify with the goal. If the substitutions don't unify, then I'm sure it is marked invalid. However, what if the theorem isn't in the list of previously proved theorems? What does GPT-f do?

- Try to prove that theorem,

- consider this an invalid proof step, or

- restrict the output to only known theorems?

(I assume it is the first option, but I want to check.)

Stanislas Polu (Sep 14 2020 at 07:52):

@Jason Rute Good questions!

- If the unification fails, the kernel rejects the proof step and it is not even considered in the proof tree search (not added to the tree or queue, nor valued by the value function).

- If the theorem statement generated is not in the theorem database, currently and in the experiments reported in the paper, the kernel rejects it as well. That being said we're experimenting with letting the model prove such conjectures if they are considered interesting by the value function. In that case we simply add the theorem itself as a subgoal (with a special tag to make sure we re-check the distinct variables once a proof is found (DVs are a metamath technicality that is ok to abstract in your thinking and revisit later if you don't know how they work)) and the subgoal is valued and added to the queue accordingly.

Jason Rute (Sep 14 2020 at 13:25):

@Stanislas Polu Another follow up question: When validating or testing the model, do you have any kind of dependency restriction on theorems? For example, in most of these papers, to prove 0 = 0 (if it is in the test set), one must use theorems which came before 0 = 0. I believe the Holophrasm and MetaGen papers do this. The MetaGen paper calls these "background theorems". (This is not perfect however for tactic based provers, since 0 = 0 might be provable with a tactic which came along after the proof of 0=0. I have more thoughts on better ways to do this, but that is for another time.) Does your paper do this? I ask, because without a restriction like this, you could have a much higher percentage of theorems solved.

Stanislas Polu (Sep 14 2020 at 13:26):

Yep this is mentioned in the paper (I believe?). We enforce theorem ordering as we evaluate on set.mm :+1:

Jason Rute (Sep 14 2020 at 13:37):

Sorry. I had trouble finding it. I see it now, on the middle of page 4. Thanks!

Stanislas Polu (Sep 14 2020 at 13:55):

(FWIW the lift you generally get from waiving that constraint is 2-3% success rate)

Jason Rute (Sep 15 2020 at 03:21):

I should mention that there are a few threads talking about GPT-f on the Internet besides this one:

- Twitter: https://twitter.com/spolu/status/1303578985276887042

- Reddit: GPT-f: a new SOTA for automated mathematical proofs : MachineLearning

- MetaMath Google Group: GPT-f paper

Posted my first paper on arXiv:boom::raised_hands: GPT-f is a Transformer-based automated theorem prover. We show that Transformer + Search is suitable to formal reasoning and continuous self-improvement 🦾 https://arxiv.org/abs/2009.03393 https://twitter.com/spolu/status/1303578985276887042/photo/1

- Stanislas Polu (@spolu)Jason Rute (Sep 15 2020 at 03:21):

As usual, I’m going to try to summarize the paper for an audience who knows more about theorem provers than neural networks.

Jason Rute (Sep 15 2020 at 03:22):

In many ways GPT-f is similar to other theorem provers which have come before, HOList/DeepMath, CoqGym/ASTTactic, TacticToe, etc. What all of these have in common is that they treat theorem proving as a tree search. What has been known for a long time is that one can avoid combinatorial explosion in tree (and graph) search by adopting smart heuristics. What AlphaGo and its successors has taught us is that these heuristics can be entirely learned either from examples or from bootstrapping and reinforcement learning. GPT-f is no different in this regard. (I’m not going to say much more about the specific tree search algorithm used by GPT-f, since I don’t think their approach is especially optimized more than any other similar paper.)

Jason Rute (Sep 15 2020 at 03:22):

Currently GPT-f is a system for MetaMath, but that is just a design choice. The MetaMath system is embarrassingly simple, but at the same time user-friendly enough to have developed a large library. That makes it a good candidate for a first experiment. Also, as we will see, GPT-f has no problem handling the most difficult part about MetaMath automation.

Jason Rute (Sep 15 2020 at 03:22):

From a certain point of view, one can think of MetaMath as a tactic-based prover with only one tactic. If one reads a MetaMath proof backward, at every point there is one or more goals, say ( 3 + 2 ) = 5. One can then apply a previously proved theorem, for example transitivity: { A = B, B = C } |- A = C and specify how to substitute the free variables. After substitution, the conclusion must equal the goal. Therefore, in this example the substitutions for A and C must be A = ( 3 + 2 ) and C = 5, while B could be anything. The trick, of course, is to substitute something useful for B. If you choose B = 4 + 1 , then after applying this theorem (backwards), one gets a new goal for each hypothesis: ( 3 + 2 ) = ( 4 + 1 ) and ( 4 + 1 ) = 5. The latter happens to be a theorem (the definition of 5 in MetaMath), which would close that particular goal.

Jason Rute (Sep 15 2020 at 03:22):

In most of the work applying deep learning to (tactic-based) theorem proving, there are four main tasks:

- tactic selection: Given a goal, find the best tactic. The nice thing about tactic selection is that there is a fixed list of tactics. Choosing the best thing from a fixed list is easy for deep learning systems. For MetaMath, it is trivial, since there is only one tactic.

- premise/lemma selection: Given a goal (and a tactic), find the best theorem to apply (assuming the tactic takes one or more theorems as parameters, e.g. a rewrite tactic). There are multiple ways to do this. Many systems assign each theorem a vector, a key, and assign the goal another vector, a query, and try to find the theorem(s) whose keys most closely match the query. Other systems, try to find the goal most similar in the training data and use whatever tactic and premises that goal used. GPT-f takes a unique approach here as we will see.

- parameter selection: Besides any theorems, there are often other parameters that need to be selected for a tactic as well. HOList handles this by avoiding tactics with such parameters (or filling in the parameters with constant values). CoqGym has a fixed, limited grammar from which those parameters can be taken. Other provers allow using local variables and subterms. Still others find a similar example in the training data and use those parameters. For MetaMath, the choice of parameters is especially important and not at all trivial. Previous Metamath AIs (Holophrasm and MetaGen) both use recurrent neural networks to guess at the substitution. This is where GPT-f will shine, since transformers are especially good at “making stuff up”.

- value estimation: Finally, to make the tree search more efficient, usually a score is applied to each goal to say how “provable” it is.

Jason Rute (Sep 15 2020 at 03:23):

One comment on the above four steps is that the first three are called a policy and can be done together or separately. Also, in, say, Lean, it is not uncommon to see tactics like apply (lem p) which don’t fit cleanly into the above paradigm. The “premise” is technically lem p but morally the premise is lem and one modifies lem by instantiating the universal quantifier with the term p. GPT-f (if applied to Lean) would show a lot of promise for handling situations like this as well.

Jason Rute (Sep 15 2020 at 03:23):

GPT-f is based on a transformer architecture. (See my notes on transformers here.) Without getting into the details, it basically is a (really good!) text generator. You give it a prompt and it completes the prompt. In this case, the system was trained to take prompts like this:

GOAL [[]] |- (3 + 2) = 5 PROOFSTEP

and then complete that prompt with something like the following (except without the new lines which I added for readability):

GOAL [[]] |- ( 3 + 2 ) = 5

PROOFSTEP

[[ |- A = B |- C = B ]] |- A = C

{{ A : ( 3 + 2 ) }}

{{ B : ( 4 + 1 ) }}

{{ C : 5 }}

<|endoftext|>

Jason Rute (Sep 15 2020 at 03:23):

The way the text is generated allows for generating multiple stochastic completions to the prompt. Each completion is scored and checked by MetaMath to see if it is a valid proof step. If so, it is plugged into the tree search. (The value function is generated similarly, but trained via reinforcement learning similar to HOList/DeepMath. See the GPT-f paper for details.)

Jason Rute (Sep 15 2020 at 03:23):

What separates this paper from other similar works is a few small but important details. Whereas other models might design a custom machine learning architecture for the exact logic at hand, transformers take the viewpoint: “As long as its text, I can handle it.”

Jason Rute (Sep 15 2020 at 03:24):

There have been a number of papers recently showing that transformers can mimic logical reasoning. (The most famous is the paper showing that transformers can solve integrals.) I hope it is very clear to any remaining skeptics that if we are willing to pay for the computer power (more on that in a second…), then we basically have a general purpose tool which can begin to mimic advanced human reasoning on novel tasks. I’m not saying it can come up with a proof of a Millennium Problem, but it can solve straightforward proofs in whatever ITP you want. There is nothing special here about tactic-mode proofs vs term mode vs Isar-style vs first-order logic. In the end, they can all be implemented by a tree search guided by a (transformer) neural network. The only thing stopping us is (1) engineering and (2) computer power.

Jason Rute (Sep 15 2020 at 03:24):

Getting back to the “it’s just text” theme, probably the most surprising thing about this paper is the way the model was pre-trained. GPT-style transformer models are most famously known for generating fake realistic text, be it a screen play or question answering. It is well known that to achieve the best results one must pre-train the model on a large corpus of text, usually public domain books and websites. GPT-f is no different. The model improved by 8 percentage points when pre-trained on such information. It even did a few points better when trained on more mathematical text, including arXiv, GitHub, and Math StackExchange. (I have so many questions about what the model is getting out of these datasets. It is just about recognizing math terminology and parentheses matching, or is it somehow memorizing common proofs?)

Jason Rute (Sep 15 2020 at 03:24):

Another thing which is fascinating about GPT-style transformers is that they don’t use a fixed vocabulary. This can really be a problem for other models. What if a user uses a new definition, or just picks a unique variable name? GPT uses something called byte pair encoding which scans the training data for common groupings of symbols. Those then become the tokens. A common word may be its own token, but an uncommon word may be made of multiple tokens, possibly just a token for each letter. This allows any new words at test time.

Jason Rute (Sep 15 2020 at 03:25):

Now, as for MetaMath, the transformer architecture provides a number of interesting possibilities. First, notice that when the transformer returned the theorem to apply, it didn’t call it by name or look it up from a list of theorems. It called it by the statement of the theorem. To be clear, this is a design choice, but it is a really interesting one. There are two related ways to look at this: (1) the transformer has memorized the theorems in the dataset. (2) the transformer says “hey, what I really need here is a theorem of the form …”. Of course, it is probably a little of both cases. However, the second case leads to the possibility of conjecturing. If the “theorem” isn’t in the dataset, then one could set out to prove it anyway. (As @Stanislas Polu said above, they don’t do this yet.)

Jason Rute (Sep 15 2020 at 03:25):

The second interesting thing is that unlike a lot of similar systems, the transformer has little or no problem filling in the variable substitutions. It can just “guess” a substitution. Of course it may not be useful or even type check, but MetaMath can check that the proof step is valid and the tree search will test it for usefulness. @Christian Szegedy has also said he thinks that GPT-style text generation is also a promising best path forward when a theorem prover needs to come up with new ideas.

Jason Rute (Sep 15 2020 at 03:26):

Before I get into the negatives, I want to really commend @Stanislas Polu and team on making this not only into a paper, but into a usable tool that the MetaMath community can use. I think this back-and-forth interaction with the community is what is going to drive AI for ITPs forward.

Jason Rute (Sep 15 2020 at 03:26):

Ok, now for the negatives. Basically, this is a great experiment and I’m glad OpenAI is fitting the bill for this system, but to be clear this is a quite expensive project. It shows what is possible, but it probably isn’t scalable to the average MetaMath user level right now. I doubt any of us could build a comparable system without the backing of a large research lab like Google, Facebook, OpenAI, or DeepMind.

Jason Rute (Sep 15 2020 at 03:26):

It’s well known that transformers are computationally expensive. They require O(n^2) computations to compute a sequence of length n (including the prompt). They also have more parameters that many other neural network types.

Jason Rute (Sep 15 2020 at 03:27):

To run the larger model once over the training data required 20,000 GPU-hours on a V100. Contrast this with the first HOList/DeepHOL paper. While the DeepHOL model took a lot of iterations to train via reinforcement learning (eight V100 GPUs running for an unspecified amount of time), the trained version is something I could run on my Macbook Air. When doing the “reinforcement learning”, the GPT-f model is only iterated twice since it is so expensive to run, compared to the thousands of iterations used by HOList/DeepHOL.

Jason Rute (Sep 15 2020 at 03:27):

To put it in dollars, a V100 GPU-hour costs on the order of $1 per GPU-hour, so this is $20,000 to run an already trained model once across the training data. I’m very curious what their MetaMath proof assistant web-tool is costing OpenAI.

Jason Rute (Sep 15 2020 at 03:27):

Nonetheless, there is a lot of room for optimization. I think I’ve seen five or so papers in the last few months suggesting how to make transformers behave closer to O(n).

Jason Rute (Sep 15 2020 at 03:28):

Also, I’m still of the opinion that since formulas have so much built-in structure, that using some of that structure as an inductive bias is still valuable. It’s been shown (more than once) that transformers trained with tree-based position encodings do much better at symbolic reasoning tasks. However, I also realize that such encodings would limit the pre-training options. I recall N2Formal (@Christian Szegedy) suggesting ideas for pre-training which may be helpful here. Also, it might be useful to try to gather a large dataset of formula-like data from the web parsed into a tree or graph structure.

Jason Rute (Sep 15 2020 at 03:28):

I can also think of a number of other possible optimizations. While having a transformer which guesses everything is a nice experiment, it might still be more efficient to fill in the constrained substitutions using a Prolog like system instead. It also might still be faster to use the theorem database more directly for lookup of theorems. For example, Google recently showed the possibility of using transformers to lookup data from a database.

Jason Rute (Sep 15 2020 at 03:28):

Finally, the holy grail is code generation. Why have an expensive black box when you can have an AI that generates code (custom tactics in this case)? This code would be reusable, fast, and interpretable. Of course, transformers are being used for code generation too. :)

Jason Rute (Sep 15 2020 at 03:29):

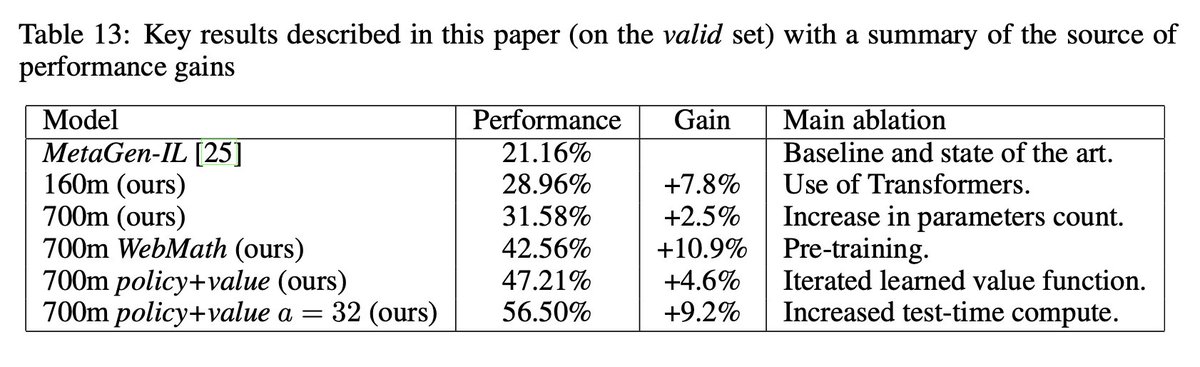

One last thought. It is so difficult to compare results since we don’t have standard datasets, but they report a success rate of 56% for their best model, which is much better than the previous SOTA of 21%. I’d love it if they try this out on the HOList dataset so that they can directly compare with Google’s state of the art. Even then however, I feel that the best judge is to put this in the hands of ITP users and to ask them what it does well on and doesn’t do well on. Again, I’m really glad for their engagement with the MetaMath community.

Jason Rute (Sep 15 2020 at 03:29):

Overall, I am really grateful for this paper. It is well-written (if you know a bit about transformers at least), and I think it shows that we have a lot of the tools at least to start building high powered experimental tools. Hopefully, we can then turn to making these systems useful to the average ITP user. I’m really looking forward to what the future brings.

Jason Rute (Sep 15 2020 at 03:39):

@Stanislas Polu can, of course, correct all my misconceptions. :)

Christian Szegedy (Sep 15 2020 at 04:32):

-

For comparison, on the HOl-Light corpus, we can reach 70% proof success rate (67% without any imitation on exsisting proofs). I'm not sure how it compares, but my guess would be that HOL-Light is a bit harder the metamath.

-

In the beginning of the year, we have also tried the approach of using transformer in autoregressive manner to generate the whole tactic invocation together with all the substitutions, theorem labels, etc. and while the results seemed somewhat comparable, it was so much more expensive to run computationally, that it just did not seem to make a lot of sense to us.

That's why we started to look for tasks where generative transformers would shine: conjecturing, filling in assumptions, etc.

I don't say that GPT-f does not make sense, we have a very similar system for more than half a year, but it was simply not justifiable from a practicabality point of view at this point in time, especially that HOLst was also criticized for being slow, while we use a few minutes per proof attempt on CPU.

Stanislas Polu (Sep 15 2020 at 07:23):

@Jason Rute thank you for the thoughtful comments. This is a great summary and glad to see our work put in perspective this way.

Commenting quickly on the 20k GPU.hours. They are required for the data generation/augmentation process (running proof searches on the train set) which is in turn used to train the value function you refer to in your post (same was true for alphago/zero, the data generation, aka exploration, is where you pay the price). So, the number is definitely accurate but just wanted to point out that the training of the model itself is less expensive (more like 500-1k GPU.hours).

As for the user experience of the ITP, I'll be demonstrating it today at AITP'20. I'll gladly make another video for folks here if interested. As you'll see the model is fast enough for it to be a somewhat pleasing experience (once you've climbed the Metamath learning curve that is :p)

(Also, I'm pretty confident OpenAI will be happy to foot the bill, for the foreseeable future, for usage of these systems once we manage to port them to Lean, as we do today with Metamath. The main challenge/problem I believe and as you point out is to make a useful system and share it effectively with the community :+1:)

Stanislas Polu (Sep 15 2020 at 07:27):

To attend the talk same as what Daniel said here. Ping me if interested, it's at ~15h CET (see AITP Program)

Johan Commelin (Sep 15 2020 at 07:28):

But do I understand correctly that for the time being we will depend on external computing power to be able to run GPT-f? You can't extract a trained artifact that I can run on a 16 GB RAM + modern desktop CPU/GPU, or can you? (And expect it to spit back results withing seconds instead of days.)

Stanislas Polu (Sep 15 2020 at 07:31):

We can extract a trained artifact that could run correctly on one modern GPU for inference. It's just that this trained artifact, today, is served through the OpenAI API.

Johan Commelin (Sep 15 2020 at 07:32):

Ok, cool

Johan Commelin (Sep 15 2020 at 07:32):

I was expecting that simply executing the thing would already require > 20GB RAM

Stanislas Polu (Sep 15 2020 at 07:32):

Not yet :p

Johan Commelin (Sep 15 2020 at 07:33):

I mean, just fitting the parameters into memory is already a mild feat (-;

Jason Rute (Sep 16 2020 at 13:03):

Christian Szegedy said:

- For comparison, on the HOl-Light corpus, we can reach 70% proof success rate (67% without any imitation on exsisting proofs).

The HOList website currently lists 60% as the best success rate. Was there anything big you did to get it to 70%/67%, or is it just combining the two techniques from your last two HOList papers (GNNs and better reinforcement learning)? Is there another HOList paper coming?

Joe Palermo (S2'17) (Oct 04 2020 at 22:15):

Hi @Stanislas Polu - I’m wondering how you converted proof step substitutions generated by your model back into MetaMath (i.e. a sequence of labels). Of course in actual MetaMath proofs one needs to construct the terms that get substituted in. Constructing a complex term could require many steps in a MetaMath proof. Which specific steps (labels) are required is represented only implicitly in the substitutions. It’s not obvious to me how to write a verifier for proof steps written in your substitution format. Can you offer any pointers? Many thanks!

Mario Carneiro (Oct 04 2020 at 23:47):

@Joe Palermo (S2'17) I can't speak for Stanislas Polu 's implementation, but it is fairly common for metamath proof assistants to work directly with intermediate statements and infer the substitutions by unification. First order unification is not a particularly hard problem, it is decidable with a fast algorithm (in contrast to higher order unification, which lean has to deal with sometimes and is undecidable in general).

Stanislas Polu (Oct 05 2020 at 07:09):

Hi @Joe Palermo (S2'17) the language model generates the substitutions, then we operate at the term level in our kernel. To ensure correctness, we indeed have to prove that expressions are properly typed, as we work on proof we do by checking that the term we operate on comply to the Metamath grammar (which is encoded by the wff, class, setvar axioms). Only when we want to check the proof with another kernel, we dump the proof in native Metamath format, using our parse trees to generate the full proofs. Hope that answers your question?

Gabriel Ebner (Oct 05 2020 at 07:10):

If I read the paper correctly, the model doesn't generate the lemma names. Do you just try all lemmas in set.mm and see which one fits the statements produced by the model?

Stanislas Polu (Oct 05 2020 at 07:13):

@Gabriel Ebner indeed we generate the theorem terms and check that they exist in set.mm. We've observed that it helps the machine learning a lot (which kind of makes sense as it makes the distribution of theorems and the distribution of term substitutions more alike and therefore easier to fit together)

Joe Palermo (S2'17) (Oct 05 2020 at 13:53):

@Stanislas Polu Yes, thank you!

Joe Palermo (S2'17) (Oct 07 2020 at 21:39):

@Stanislas Polu Would I be correct in thinking that the axioms required to define this grammar are all the axioms of the form:

<label> $a wff <expression> $.

<label> $a class <expression> $.

<label> $a setvar <expression> $. (seems that this particular pattern doesn't occur in the set.mm database)

Jason Rute (Oct 07 2020 at 22:12):

Since we are asking questions, as for @Gabriel Ebner’s question, can you look up the lemma directly from the output of GPT-f? In other words, can you plug the output into a hash map and get the lemma (maybe after standardizing variable names)? Or do you need to do the O(n) operation where you try to unify every earlier occurring lemma against the output of GPT-f.

Mario Carneiro (Oct 07 2020 at 22:19):

@Joe Palermo (S2'17) Yes, metamath terms are given by a CFG where each $a with a typecode other than |- contributes one production (and the nonterminals are wff, class, setvar). The $f variable declaration commands also contribute productions, so the setvar nonterminal is not empty, it only contains variables (as terminals).

Joe Palermo (S2'17) (Oct 07 2020 at 23:42):

@Mario Carneiro Thank you!

Joe Palermo (S2'17) (Oct 07 2020 at 23:43):

@Jason Rute I'm wondering the same thing...

Mario Carneiro (Oct 08 2020 at 01:14):

@Jason Rute This problem seems pretty similar to the problem of simp: Given a term and a collection of lemmas, find one that unifies. You can do it pretty efficiently with a discrimination tree, and in fact this process is fast enough that the mmj2 metamath proof assistant has a feature where every open goal is automatically unified against everything in the library any time you do anything, as if simp was constantly running in the background. It applies every lemma that makes the goal "smaller" in some sense, except for some blacklisted lemmas, and it's quite convenient and effective.

Stanislas Polu (Oct 08 2020 at 06:34):

@Jason Rute we're just looking up in a hash table constructed from set.mm (enforcing ordering here) :+1:

Stanislas Polu (Oct 08 2020 at 06:35):

The theorem statement generated by GPT-f is pre-unification so it's a simple lookup. GPT-f also generates substitutions that are then checked against the grammar, applied, and the fact that they unify is verified.

Stanislas Polu (Oct 08 2020 at 06:37):

@Joe Palermo (S2'17) As explained by @Mario Carneiro, yes :+1:

Joe Palermo (S2'17) (Oct 14 2020 at 16:06):

Hi @Stanislas Polu. I’m trying to replicate something similar to the MetaMath environment you developed for GPT-f. @Mario Carneiro mentioned to me that he described to you a “KLR(0)” parsing algorithm for MetaMath expressions. Was this the one you ended up implementing? In the paper you refer to a “modified LR(0) parser”.

Stanislas Polu (Oct 14 2020 at 16:42):

Yes we implemented an LR(0) parser with basic backtracking as the amount of backtracking necessary to parse the Metamath grammar is well behaved and limited in practice. It's somewhat different than what is implemented in mmj2, in case you looked into it, where the "backtracking" is done at parser construction time.

Joe Palermo (S2'17) (Oct 16 2020 at 17:01):

@Mario Carneiro would you mind sharing some of the documentation on that KLR(0) parser here?

Mario Carneiro (Oct 16 2020 at 17:18):

Sure, data dump coming right up. The following example walks through the parsing of { <. x , y >. } vs { <. x , y >. | ph } in the set.mm grammar, which yields a shift reduce conflict when parsed with LR(0). (This description assumes you know a bit about how LR(0) parse table generation works; see the wikipedia page for a primer.)

Mario Carneiro (Oct 16 2020 at 17:18):

This is the new part of the code that distinguishes the KLR parser from LR(0). A "conflict" is a place where an LR(0) parser would fail outright.

Mario Carneiro (Oct 16 2020 at 17:18):

During parse table generation each state is associated with a bunch of partially read productions that agree on a common prefix, and in this case you are stuck at the state:

-> { o <. setvar , setvar >. | wff }

-> { o class }

This is known as a shift-reduce conflict, and usually shifting is the right answer, so that's built in as a heuristic, which is why lark takes the first option over the second. But neither choice is "correct", because this changes the grammar - you are now rejecting a string that should be valid to the grammar ({ <. x , y >. } in this case) - so essentially you are finding a "closest LALR(1) approximation" to the grammar when you use lark with this heuristic.

To resolve this, the question is what to do from that state if you read a <.. We haven't actually hit the conflict yet. In the first production it's clear that we should step to -> { <. o setvar , setvar >. } | ph }, but the second production requires us to look at the class nonterminals that start with <.. (In fact, in the first state we also have all productions for the class nonterminal, like -> o 1 and -> o ( class u. class ) and many others. These are represented in a special way in LRParser.java to save space.) Let's step through the states that the example takes. The shift <. step takes us to:

-> { <. o setvar , setvar >. | wff }

-> <. o class , class >.

all setvar -> o rules

all class -> o rules

and shift x takes us to:

-> x o

Since we are now at the end of a production, we can reduce with setvar -> x at this point, and there are no competing productions so this is safe. This reduce x edge pops the stack and acts like a shift setvar edge from the previous step, leading to:

-> { <. setvar o , setvar >. | wff }

-> setvar o

The -> setvar o comes from the class -> setvar production. Now we are stuck, because we can both reduce with this production (which gives us a cv node) and shift a comma to continue with the first production. This is a shift-reduce conflict, and lark at this point will throw away the reduce option and shift here, leading to

-> { <. setvar , o setvar >. | wff }

all setvar -> o rules

which is not correct, as we have lost the ability to parse { <. x , y >. }.

Mario Carneiro (Oct 16 2020 at 17:18):

What I do instead to resolve this is "pre-compositing" the rules. We first got in trouble at

-> { <. setvar o , setvar >. | wff }

-> setvar o

which is a "bad state" because of the shift-reduce conflict. We want to remove the reduce node, and we do so by backing up to see how we got here. We obtained this state by shift setvar applied to

-> { <. o setvar , setvar >. | wff }

-> <. o class , class >.

all setvar -> o rules

all class -> o rules

and we want to repair this state so that we don't hit the train wreck one step from here. So we delete the offending rule -> o setvar and add the composition of class -> setvar with class -> <. class , class >. as a new "composite rule" which looks like a production class -> <. setvar , class >., so that the "before" state instead looks like

-> { <. o setvar , setvar >. | wff }

-> <. o class , class >.

-> <. o setvar , class >.

all setvar -> o rules

all class -> o rules except -> o setvar

and we shift setvar from here instead, getting to

-> { <. setvar o , setvar >. | wff }

-> <. setvar o , class >.

and we have safely cleared the conflict. (The modified "before" state is not a real state, it is only used to calculate this new state. This state is replacing the original shift-reduce bad state as the result of shift setvar applied to

-> { <. o setvar , setvar >. | wff }

-> <. o class , class >.

all setvar -> o rules

all class -> o rules

.)

Mario Carneiro (Oct 16 2020 at 17:19):

To finish the example off, let's make it to the end. The next step is shift , which takes us to

-> { <. setvar , o setvar >. | wff }

-> <. setvar , o class >.

all setvar -> o rules

all class -> o rules

(and shift y takes us to the simple -> y o state, so we plan to reduce there and come back here with shift setvar), and shift setvar from here takes us to

-> { <. setvar , setvar o >. | wff }

-> setvar o

which is another shift reduce conflict. Again, we analyze the conflict to find out what to composite. We want to apply the class -> setvar *production here, which was considered one step ago because closure over -> <. setvar , o class >. required us to add the -> o setvar production to the state. So we composite class -> <. setvar , class >. and class -> setvar to get a new class -> <. setvar , setvar >. production, create a temporary modified version of the previous state

-> { <. setvar , o setvar >. | wff }

-> <. setvar , o class >.

-> <. setvar , o setvar >.

all setvar -> o rules

all class -> o rules except -> o setvar

and use it to calculate the new result of shift setvar, which is

-> { <. setvar , setvar o >. | wff }

-> <. setvar , setvar o >.

and we have cleared another shift reduce conflict. There is still one more to go, though, since the next step is shift >. which takes us to

-> { <. setvar , setvar >. o | wff }

-> <. setvar , setvar >. o

which is again a shift reduce conflict. Now we must backtrack a lot, because we have to go back 5 steps (the length of the current reduce candidate) to find out which production required us to put -> <. setvar , setvar >. into the mix. In fact, five steps ago this production was not even -> <. setvar , setvar >. at all but rather -> <. class , class >. but this makes no difference, we need two productions to do the compositing. This is the first state I posted, which looks like

-> { o <. setvar , setvar >. | wff }

-> { o class }

all class -> o rules

where among the class rules is -> o <. class , class >.. The reason the class rules are there is because of the -> { o class } rule, so we composite class -> { class } with class -> <. setvar , setvar >. to get the temporary state

-> { o <. setvar , setvar >. | wff }

-> { o class }

-> { o <. setvar , setvar >. }

all class -> o rules except -> o <. class , class >.

and now shift 5 steps forward along <. setvar , setvar >. to get the repaired state

-> { <. setvar , setvar >. o | wff }

-> { <. setvar , setvar >. o }

Now we have finally cleared the last hurdle, as we can clearly now either shift | or shift } depending on what we see next to parse both { <. x , y >. | ph } and { <. x , y >. }. For the purpose of the example let's say we shift } so we get to state

-> { <. setvar , setvar >. } o

and a reduce is unambiguous.

Mario Carneiro (Oct 16 2020 at 17:19):

But what are we reducing anyway? I've been talking about compositing rules, and what I haven't been showing here is that each production is associated to a partial expression tree. You can imagine them as lambda expressions. The original rules from the grammar will have a result like \e1 e2. (cpr e1 e2) for the production class -> <. class , class >., which is to say that we take the two class expressions in the brackets and put them into the two arguments of a cpr node. The arguments aren't always in parse order, for example I think wal takes its arguments in the order wal ph x (because the $f variable declaration of vx comes after wph), so the production wff -> A. setvar wff has result \e1 e2. (wal e2 e1).

Now compositing rules has the effect of a composition of these two expressions. In the first part we composited class -> <. class , class >. with class -> setvar, with associated results \e1 e2. (cpr e1 e2) and \e1. (cv e1), so we insert the cv expression in for e1 of the cpr expression to get a new result \e1 e2. (cpr (cv e1) e2) for the new production class -> <. setvar , class >.. Similarly, the production class -> <. setvar , setvar >. is formed by inserting cv in for e2 in this new production, resulting in \e1 e2. (cpr (cv e1) (cv e2)). And finally, we composited this expression with the class -> { class } production with result \e1. (csn e1), and this composition yields, for the composite rule class -> { <. setvar , setvar >. }, the result \e1 e2. (csn (cpr (cv e1) (cv e2))). This is what we reduce with.

So for the example { <. x , y >. }, we first reduce using setvar -> x := vx, then setvar -> y := vy, then class -> { <. setvar , setvar >. } := \e1 e2. (csn (cpr (cv e1) (cv e2))) to get the final parse tree (csn (cpr (cv vx) (cv vy))).

Joe Palermo (S2'17) (Oct 19 2020 at 12:55):

@Mario Carneiro Thanks very much! Might take me some time to wrap my head around this since I don't know much about parsers. I'll get back to you if I have questions.

Mario Carneiro (Oct 19 2020 at 12:55):

It is a repost, so the context might not be exactly right for the venue. Feel free to ask if something is out of context

Brando Miranda (May 24 2021 at 12:16):

I'm curious @Stanislas Polu how different/similar is GPT-f vs the GPT used for coding (https://analyticsindiamag.com/open-ai-gpt-3-code-generator-app-building/)?

Stanislas Polu (May 24 2021 at 13:33):

@Brando Miranda the architecture is the same (smaller model similar in size to GPT-2) but the training objective is quite different (see section 4.2) :+1:

Brando Miranda (May 24 2021 at 15:01):

Stanislas Polu said:

Brando Miranda the architecture is the same (smaller model similar in size to GPT-2) but the training objective is quite different (see section 4.2) :+1:

Thanks Stanslas! I appreciate the message. I will check that out.

Out of curiosity, is there a reason you prefered a transformer model e.g. GPT than an enumerator with a neural recognition model (e.g. as in Dreamcoder, Deepcoder, etc. related work in that path)

Stanislas Polu (May 24 2021 at 16:27):

Wellll... Dreamcoder and GPT-f objective are not too dissimilar.

You could view proof search as the wake phase, the model conjecturing capabilities (generating theorem statements during proof search that are not part of the formal library) as the abstraction phase and attempting to prove them as the dream phase.

Jason Rute (May 26 2021 at 01:45):

Brando Miranda said:

I'm curious Stanislas Polu how different/similar is GPT-f vs the GPT used for coding (https://analyticsindiamag.com/open-ai-gpt-3-code-generator-app-building/)?

You probably know this, but my understanding of GPT-3 applications, is one doesn't retrain the model. Instead they come up with a prompt to extract information out of the model. For example to find the capital of England, you would give GPT-3 a prompt like `France => Paris, China => Beijing, England => " and the model will complete the string with "London". It's crazy, but with good prompt engineering, you can take this really far. In this way, GPT-3 can code since there was a lot of code (using standard programming languages) in the training data, and all one has to do if find a good way to engineer prompts.

GPT-f (as Stan said) uses a smaller model than GPT-3 (more like GPT-2) and therefore can be fine-tuned as is standard with GPT-2 (and BERT) applications. GPT-f was pretrained on web text and web math, and we fine tune it with tasks like GOAL P Q : Prop ⊢ ((P → Q) → P) → P PROOFSTEP apply or.elim (em P), following the pattern GOAL <TacticState> PROOFSTEP <Tactic>. When using it to predict tactics, you give it the prompt GOAL <TacticState> PROOFSTEP and it fills in the tactic. (The main insight in our paper is that we get a big boost by also co-training it with a number of other tasks. These tasks are not used for prediction, but nontheless, really help our model. The details are in the paper.)

Last updated: Feb 28 2026 at 14:05 UTC