4. Sets and Functions

The vocabulary of sets, relations, and functions provides a uniform language for carrying out constructions in all the branches of mathematics. Since functions and relations can be defined in terms of sets, axiomatic set theory can be used as a foundation for mathematics.

Lean’s foundation is based instead on the primitive notion of a type, and it includes ways of defining functions between types. Every expression in Lean has a type: there are natural numbers, real numbers, functions from reals to reals, groups, vector spaces, and so on. Some expressions are types, which is to say, their type is Type. Lean and mathlib provide ways of defining new types, and ways of defining objects of those types. Conceptually, you can think of a type as just a set of objects.
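
To see this in practice, use the #check command, which asks Lean for the type of an expression. The following is a small sketch. It assumes a Lean 3 project with mathlib, where the import data.real.basic makes ℝ available. The comments describe the expected types rather than reproducing Lean’s exact output.

```lean
import data.real.basic

-- A natural number: its type is ℕ.
#check (3 : ℕ)
-- A function from reals to reals: its type is ℝ → ℝ.
#check (λ x : ℝ, x ^ 2)
-- ℕ is itself a type, so its type is `Type`.
#check ℕ
-- Sets of natural numbers form another type, `set ℕ`.
#check (set ℕ)
```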

Requiring every object to have a type has some advantages. For example, it makes it possible to overload notation like +, and it sometimes makes input less verbose because Lean can infer a lot of information from an object’s type. The type system also enables Lean to flag errors when you apply a function to the wrong number of arguments, or apply a function to arguments of the wrong type.

Lean’s library does define elementary set-theoretic notions. In contrast to set theory, in Lean a set is always a set of objects of some type, such as a set of natural numbers or a set of functions from real numbers to real numbers. The distinction between types and sets takes some getting used to, but this chapter will take you through the essentials.

4.1. Sets

If α is any type, the type set α consists of sets of elements of α. This type supports the usual set-theoretic operations and relations. For example, s ⊆ t says that s is a subset of t, s ∩ t denotes the intersection of s and t, and s ∪ t denotes their union. The subset relation can be typed with \ss or \sub, intersection can be typed with \i or \cap, and union can be typed with \un or \cup. The library also defines the set univ, which consists of all the elements of type α, and the empty set, ∅, which can be typed as \empty.

Given x : α and s : set α, the expression x ∈ s says that x is a member of s. Theorems that mention set membership often include mem in their name. The expression x ∉ s abbreviates ¬ x ∈ s. You can type ∈ as \in or \mem and ∉ as \notin.
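
Here is a short sketch of how these notions unfold. It assumes Lean 3 with mathlib; the import data.set.basic is an assumption about your setup. Each proof works because the notation reduces to something Lean already understands. x ∉ s is a negation, and a proof of s ⊆ t can be applied to a membership proof like a function.

```lean
import data.set.basic

variables {α : Type*} (s t : set α) (x : α)

-- `x ∉ s` abbreviates `¬ x ∈ s`, so a proof of one is a proof of the other.
example (h : x ∉ s) : ¬ x ∈ s := h

-- `s ⊆ t` unfolds to "every element of s is an element of t",
-- so a proof of it can be applied to a membership proof.
example (h : s ⊆ t) (hx : x ∈ s) : x ∈ t := h hx

-- Every element of α belongs to `univ`, and nothing belongs to `∅`.
example : x ∈ (set.univ : set α) := set.mem_univ x
example : x ∉ (∅ : set α) := set.not_mem_empty x
```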

One way to prove things about sets is to use rw or the simplifier to expand the definitions. In the second example below, we use simp only to tell the simplifier to use only the list of identities we give it, and not its full database of identities. Unlike rw, simp can perform simplifications inside a universal or existential quantifier. If you step through the proof, you can see the effects of these commands.

variable {α : Type*}
variables (s t u : set α)
open set

example (h : s ⊆ t) : s ∩ u ⊆ t ∩ u :=
begin
  rw [subset_def, inter_def, inter_def],
  rw subset_def at h,
  dsimp,
  rintros x ⟨xs, xu⟩,
  exact ⟨h _ xs, xu⟩,
end

example (h : s ⊆ t) : s ∩ u ⊆ t ∩ u :=
begin
  simp only [subset_def, mem_inter_iff] at *,
  rintros x ⟨xs, xu⟩,
  exact ⟨h _ xs, xu⟩,
end

In this example, we open the set namespace to have access to the shorter names for the theorems. But, in fact, we can delete the calls to rw and simp entirely:

example (h : s ⊆ t) : s ∩ u ⊆ t ∩ u :=
begin
  intros x xsu,
  exact ⟨h xsu.1, xsu.2⟩
end

What is going on here is known as definitional reduction: to make sense of the intros command and the anonymous constructors, Lean is forced to expand the definitions. The following examples also illustrate the phenomenon:

theorem foo (h : s ⊆ t) : s ∩ u ⊆ t ∩ u :=
λ x ⟨xs, xu⟩, ⟨h xs, xu⟩

example (h : s ⊆ t) : s ∩ u ⊆ t ∩ u :=
by exact λ x ⟨xs, xu⟩, ⟨h xs, xu⟩

Due to a quirk of how Lean processes its input, the first example fails if we replace theorem foo with example. This illustrates the pitfalls of relying on definitional reduction too heavily. It is often convenient, but sometimes we have to fall back on unfolding definitions manually.

To deal with unions, we can use set.union_def and set.mem_union. Since x ∈ s ∪ t unfolds to x ∈ s ∨ x ∈ t, we can also use the cases tactic to force a definitional reduction.

example : s ∩ (t ∪ u) ⊆ (s ∩ t) ∪ (s ∩ u) :=
begin
  intros x hx,
  have xs : x ∈ s := hx.1,
  have xtu : x ∈ t ∪ u := hx.2,
  cases xtu with xt xu,
  { left,
    show x ∈ s ∩ t,
    exact ⟨xs, xt⟩ },
  right,
  show x ∈ s ∩ u,
  exact ⟨xs, xu⟩
end

Since intersection binds tighter than union, the use of parentheses in the expression (s ∩ t) ∪ (s ∩ u) is unnecessary, but they make the meaning of the expression clearer. The following is a shorter proof of the same fact:

example : s ∩ (t ∪ u) ⊆ (s ∩ t) ∪ (s ∩ u) :=
begin
  rintros x ⟨xs, xt | xu⟩,
  { left, exact ⟨xs, xt⟩ },
  right, exact ⟨xs, xu⟩
end

As an exercise, try proving the other inclusion:

example : (s ∩ t) ∪ (s ∩ u) ⊆ s ∩ (t ∪ u) :=
sorry

It might help to know that when using rintros, sometimes we need to use parentheses around a disjunctive pattern h1 | h2 to get Lean to parse it correctly.

The library also defines set difference, s \ t, where the backslash is a special unicode character entered as \\. The expression x ∈ s \ t expands to x ∈ s ∧ x ∉ t. (The ∉ can be entered as \notin.) It can be rewritten manually using set.diff_eq and dsimp or set.mem_diff, but the following two proofs of the same inclusion show how to avoid using them.

example : s \ t \ u ⊆ s \ (t ∪ u) :=
begin
  intros x xstu,
  have xs : x ∈ s := xstu.1.1,
  have xnt : x ∉ t := xstu.1.2,
  have xnu : x ∉ u := xstu.2,
  split,
  { exact xs }, dsimp,
  intro xtu, -- x ∈ t ∨ x ∈ u
  cases xtu with xt xu,
  { show false, from xnt xt },
  show false, from xnu xu
end

example : s \ t \ u ⊆ s \ (t ∪ u) :=
begin
  rintros x ⟨⟨xs, xnt⟩, xnu⟩,
  use xs,
  rintros (xt | xu); contradiction
end
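
Membership in a set difference unfolds to a conjunction. Its two halves can therefore be read off with projections and assembled with an anonymous constructor. Here is a minimal sketch, again assuming Lean 3 with mathlib:

```lean
import data.set.basic

variables {α : Type*} (s t : set α) (x : α)

-- Membership in `s \ t` unfolds to a conjunction,
-- so the projections `.1` and `.2` can be used directly.
example (h : x ∈ s \ t) : x ∈ s := h.1
example (h : x ∈ s \ t) : x ∉ t := h.2

-- Conversely, an anonymous constructor builds a proof of membership.
example (xs : x ∈ s) (xnt : x ∉ t) : x ∈ s \ t := ⟨xs, xnt⟩
```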

As an exercise, prove the reverse inclusion:

example : s \ (t ∪ u) ⊆ s \ t \ u :=
sorry

To prove that two sets are equal, it suffices to show that every element of one is an element of the other. This principle is known as “extensionality,” and, unsurprisingly, the ext tactic is equipped to handle it.

example : s ∩ t = t ∩ s :=
begin
  ext x,
  simp only [mem_inter_iff],
  split,
  { rintros ⟨xs, xt⟩, exact ⟨xt, xs⟩ },
  rintros ⟨xt, xs⟩, exact ⟨xs, xt⟩
end

Once again, deleting the line simp only [mem_inter_iff] does not harm the proof. In fact, if you like inscrutable proof terms, the following one-line proof is for you:

example : s ∩ t = t ∩ s :=
set.ext $ λ x, ⟨λ ⟨xs, xt⟩, ⟨xt, xs⟩, λ ⟨xt, xs⟩, ⟨xs, xt⟩⟩

The dollar sign is a useful syntax: writing f $ ... is essentially the same as writing f (...), but it saves us the trouble of having to close a set of parentheses at the end of a long expression.
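
As a tiny illustration using only core Lean 3 and the successor function nat.succ, the two spellings below produce the same term. A chain of $ also saves several closing parentheses:

```lean
-- `f $ e` parses as `f (e)`, so these two terms are identical.
example : (nat.succ $ nat.succ 0) = nat.succ (nat.succ 0) := rfl

-- `$` is right-associative, so nested applications need no closing parentheses.
#eval nat.succ $ nat.succ $ nat.succ 0   -- 3
```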

Here is an even shorter proof, using the simplifier:

example : s ∩ t = t ∩ s :=
by ext x; simp [and.comm]

An alternative to using ext is to use the theorem subset.antisymm, which allows us to prove an equation s = t between sets by proving s ⊆ t and t ⊆ s.

example : s ∩ t = t ∩ s :=
begin
  apply subset.antisymm,
  { rintros x ⟨xs, xt⟩, exact ⟨xt, xs⟩ },
  rintros x ⟨xt, xs⟩, exact ⟨xs, xt⟩
end

Try finishing this proof term:

example : s ∩ t = t ∩ s :=
subset.antisymm sorry sorry

Remember that you can replace sorry by an underscore, and when you hover over it, Lean will show you what it expects at that point.

Here are some set-theoretic identities you might enjoy proving:

example : s ∩ (s ∪ t) = s :=
sorry

example : s ∪ (s ∩ t) = s :=
sorry

example : (s \ t) ∪ t = s ∪ t :=
sorry

example : (s \ t) ∪ (t \ s) = (s ∪ t) \ (s ∩ t) :=
sorry

When it comes to representing sets, here is what is going on under the hood. In type theory, a property or predicate on a type α is just a function P : α → Prop. This makes sense: given a : α, P a is just the proposition that P holds of a. In the library, set α is defined to be α → Prop and x ∈ s is defined to be s x. In other words, sets are really properties, treated as objects.

The library also defines set-builder notation. The expression { y | P y } unfolds to (λ y, P y), so x ∈ { y | P y } reduces to P x.

So we can turn the property of being even into the set of even numbers:

def evens : set ℕ := {n | even n}
def odds : set ℕ := {n | ¬ even n}

example : evens ∪ odds = univ :=
begin
  rw [evens, odds],
  ext n,
  simp,
  apply classical.em
end

You should step through this proof and make sure you understand what is going on. Try deleting the line rw [evens, odds] and confirm that the proof still works.
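
To make the correspondence between predicates and sets concrete, here is a sketch assuming Lean 3 with mathlib. The name below_three is hypothetical and is used only for illustration. The membership proofs go through because x ∈ { y | P y } reduces to P x.

```lean
import data.set.basic

-- Hypothetical example: the set of natural numbers below three.
def below_three : set ℕ := {n | n < 3}

-- Membership in a set-builder set reduces to the defining property.
example : 2 ∈ below_three := show 2 < 3, from dec_trivial

-- A set is a predicate, so `x ∈ s` is definitionally `s x`.
example (s : set ℕ) (x : ℕ) : x ∈ s ↔ s x := iff.rfl

-- Any predicate can be turned into a set with set-builder notation.
example (P : ℕ → Prop) (x : ℕ) (h : P x) : x ∈ {y | P y} := h
```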

In fact, set-builder notation is used to define s ∩ t as {x | x ∈ s ∧ x ∈ t}, s ∪ t as {x | x ∈ s ∨ x ∈ t}, ∅ as {x | false}, and univ as {x | true}.

We often need to indicate the type of ∅ and univ explicitly, because Lean has trouble guessing which ones we mean. The following examples show how Lean unfolds the last two definitions when needed. In the second one, trivial is the canonical proof of true in the library.

example (x : ℕ) (h : x ∈ (∅ : set ℕ)) : false :=
h

example (x : ℕ) : x ∈ (univ : set ℕ) :=
trivial

As an exercise, prove the following inclusion. Use intro n to unfold the definition of subset, and use the simplifier to reduce the set-theoretic constructions to logic. We also recommend using the theorems nat.prime.eq_two_or_odd and nat.even_iff.

example : { n | nat.prime n } ∩ { n | n > 2} ⊆ { n | ¬ even n } :=
sorry

Be careful: it is somewhat confusing that the library has multiple versions of the predicate prime. The most general one makes sense in any commutative monoid with a zero element. The predicate nat.prime is specific to the natural numbers. Fortunately, there is a theorem that says that in the specific case, the two notions agree, so you can always rewrite one to the other.

#print prime
#print nat.prime

example (n : ℕ) : prime n ↔ nat.prime n := nat.prime_iff.symm

example (n : ℕ) (h : prime n) : nat.prime n :=
by { rw nat.prime_iff, exact h }

The rwa tactic follows a rewrite with the assumption tactic.

example (n : ℕ) (h : prime n) : nat.prime n :=
by rwa nat.prime_iff

Lean introduces the notation ∀ x ∈ s, ..., “for every x in s ...,” as an abbreviation for ∀ x, x ∈ s → .... It also introduces the notation ∃ x ∈ s, ..., “there exists an x in s such that ....” These are sometimes known as bounded quantifiers, because the construction serves to restrict their significance to the set s. As a result, theorems in the library that make use of them often contain ball or bex in the name. The theorem bex_def asserts that ∃ x ∈ s, ... is equivalent to ∃ x, x ∈ s ∧ ..., but when they are used with rintros, use, and anonymous constructors, these two expressions behave roughly the same. As a result, we usually don’t need to use bex_def to transform them explicitly.
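
The following sketch, assuming Lean 3 with mathlib, checks both readings directly. The bounded universal quantifier is literally the same statement as its unfolded form. A bounded existential can be unpacked with rcases and repacked as an existential over a conjunction.

```lean
import data.set.basic

variables (s : set ℕ) (P : ℕ → Prop)

-- A bounded universal quantifier is notation for an implication under `∀`.
example : (∀ x ∈ s, P x) ↔ (∀ x, x ∈ s → P x) := iff.rfl

-- A bounded existential can be taken apart with `rcases`
-- and reassembled as an ordinary existential over a conjunction.
example (h : ∃ x ∈ s, P x) : ∃ x, x ∈ s ∧ P x :=
begin
  rcases h with ⟨x, xs, px⟩,
  exact ⟨x, xs, px⟩
end
```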

Here are some examples of how they are used:

variables (s t : set ℕ)

example (h₀ : ∀ x ∈ s, ¬ even x) (h₁ : ∀ x ∈ s, prime x) :
  ∀ x ∈ s, ¬ even x ∧ prime x :=
begin
  intros x xs,
  split,
  { apply h₀ x xs },
  apply h₁ x xs
end

example (h : ∃ x ∈ s, ¬ even x ∧ prime x) :
  ∃ x ∈ s, prime x :=
begin
  rcases h with ⟨x, xs, _, prime_x⟩,
  use [x, xs, prime_x]
end

See if you can prove these slight variations:

section
variable (ssubt : s ⊆ t)

include ssubt

example (h₀ : ∀ x ∈ t, ¬ even x) (h₁ : ∀ x ∈ t, prime x) :
  ∀ x ∈ s, ¬ even x ∧ prime x :=
sorry

example (h : ∃ x ∈ s, ¬ even x ∧ prime x) :
  ∃ x ∈ t, prime x :=
sorry

end

The include command is needed because ssubt does not appear in the statement of the theorem. Lean does not look inside tactic blocks when it decides what variables and hypotheses to include, so if you delete that line, you will not see the hypothesis within a begin ... end proof. If you are proving theorems in a library, you can delimit the scope of an include by putting it between section and end, so that later theorems do not include it as an unnecessary hypothesis.

Indexed unions and intersections are another important set-theoretic construction. We can model a sequence \(A_0, A_1, A_2, \ldots\) of sets of elements of α as a function A : ℕ → set α, in which case ⋃ i, A i denotes their union, and ⋂ i, A i denotes their intersection. There is nothing special about the natural numbers here, so ℕ can be replaced by any type I used to index the sets. The following illustrates their use.

variables {α I : Type*}
variables A B : I → set α
variable s : set α
open set

example : s ∩ (⋃ i, A i) = ⋃ i, (A i ∩ s) :=
begin
  ext x,
  simp only [mem_inter_iff, mem_Union],
  split,
  { rintros ⟨xs, ⟨i, xAi⟩⟩,
    exact ⟨i, xAi, xs⟩ },
  rintros ⟨i, xAi, xs⟩,
  exact ⟨xs, ⟨i, xAi⟩⟩
end

example : (⋂ i, A i ∩ B i) = (⋂ i, A i) ∩ (⋂ i, B i) :=
begin
  ext x,
  simp only [mem_inter_iff, mem_Inter],
  split,
  { intro h,
    split,
    { intro i,
      exact (h i).1 },
    intro i,
    exact (h i).2 },
  rintros ⟨h1, h2⟩ i,
  split,
  { exact h1 i },
  exact h2 i
end
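
The simp only calls above use mem_Union and mem_Inter. The same lemmas can be applied directly when you want to prove a single membership. Here is a minimal sketch assuming Lean 3 with mathlib, where data.set.lattice provides the indexed operations:

```lean
import data.set.lattice

open set

variables {α I : Type*} (A : I → set α) (x : α)

-- To land in an indexed union, exhibit one index whose set contains x.
example (i : I) (h : x ∈ A i) : x ∈ ⋃ i, A i :=
mem_Union.mpr ⟨i, h⟩

-- To land in an indexed intersection, show that x is in every A i.
example (h : ∀ i, x ∈ A i) : x ∈ ⋂ i, A i :=
mem_Inter.mpr h

-- Conversely, membership in the intersection yields membership in each A i.
example (h : x ∈ ⋂ i, A i) (i : I) : x ∈ A i :=
mem_Inter.mp h i
```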

Parentheses are often needed with an indexed union or intersection because, as with the quantifiers, the scope of the bound variable extends as far as it can.

Try proving the following identity. One direction requires classical logic! We recommend using by_cases xs : x ∈ s at an appropriate point in the proof.

open_locale classical

example : s ∪ (⋂ i, A i) = ⋂ i, (A i ∪ s) :=
sorry

Mathlib also has bounded unions and intersections, which are analogous to the bounded quantifiers. You can unpack their meaning with mem_Union₂ and mem_Inter₂. As the following examples show, Lean’s simplifier carries out these replacements as well.

def primes : set ℕ := {x | nat.prime x}

example : (⋃ p ∈ primes, {x | p^2 ∣ x}) = {x | ∃ p ∈ primes, p^2 ∣ x} :=
by { ext, rw mem_Union₂, refl }

example : (⋃ p ∈ primes, {x | p^2 ∣ x}) = {x | ∃ p ∈ primes, p^2 ∣ x} :=
by { ext, simp }

example : (⋂ p ∈ primes, {x | ¬ p ∣ x}) ⊆ {x | x = 1} :=
begin
  intro x,
  contrapose!,
  simp,
  apply nat.exists_prime_and_dvd
end

Try solving the following example, which is similar. If you start typing eq_univ, tab completion will tell you that apply eq_univ_of_forall is a good way to start the proof. We also recommend using the theorem nat.exists_infinite_primes.

example : (⋃ p ∈ primes, {x | x ≤ p}) = univ :=
sorry

Given a collection of sets, s : set (set α), their union, ⋃₀ s, has type set α and is defined as {x | ∃ t ∈ s, x ∈ t}. Similarly, their intersection, ⋂₀ s, is defined as {x | ∀ t ∈ s, x ∈ t}. These operations are called sUnion and sInter, respectively. The following examples show their relationship to bounded union and intersection.

variables {α : Type*} (s : set (set α))

example : ⋃₀ s = ⋃ t ∈ s, t :=
begin
  ext x,
  rw mem_Union₂,
  refl
end

example : ⋂₀ s = ⋂ t ∈ s, t :=
begin
  ext x,
  rw mem_Inter₂,
  refl
end

In the library, these identities are called sUnion_eq_bUnion and sInter_eq_bInter.
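
For individual elements, the lemmas mem_sUnion and mem_sInter play the same role for ⋃₀ and ⋂₀ that mem_Union and mem_Inter play for indexed families. A sketch, assuming Lean 3 with mathlib:

```lean
import data.set.lattice

open set

variables {α : Type*} (S : set (set α)) (t : set α) (x : α)

-- An element of a member of S is an element of ⋃₀ S.
example (ht : t ∈ S) (hx : x ∈ t) : x ∈ ⋃₀ S :=
mem_sUnion.mpr ⟨t, ht, hx⟩

-- Every member of S contains ⋂₀ S.
example (ht : t ∈ S) : ⋂₀ S ⊆ t :=
λ y hy, mem_sInter.mp hy t ht
```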

4.2. Functions

If f : α → β is a function and p is a set of elements of type β, the library defines preimage f p, written f ⁻¹' p, to be {x | f x ∈ p}. The expression x ∈ f ⁻¹' p reduces to f x ∈ p. This is often convenient, as in the following example:

variables {α β : Type*}
variable f : α → β
variables s t : set α
variables u v : set β
open function
open set

example : f ⁻¹' (u ∩ v) = f ⁻¹' u ∩ f ⁻¹' v :=
by { ext, refl }
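
The reduction of x ∈ f ⁻¹' p to f x ∈ p holds by definition, so it can be stated with iff.rfl. The concrete instance below is a hypothetical illustration assuming Lean 3 with mathlib. Its goal reduces to 3 + 1 < 5, which dec_trivial decides.

```lean
import data.set.lattice

open set

variables {α β : Type*} (f : α → β) (p : set β) (x : α)

-- Membership in a preimage unfolds to membership of the image point.
example : x ∈ f ⁻¹' p ↔ f x ∈ p := iff.rfl

-- A concrete instance: 3 is in the preimage of {y | y < 5} under n ↦ n + 1,
-- because the goal reduces to 3 + 1 < 5.
example : (3 : ℕ) ∈ (λ n : ℕ, n + 1) ⁻¹' {y | y < 5} :=
show 3 + 1 < 5, from dec_trivial
```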

If s is a set of elements of type α, the library also defines image f s, written f '' s, to be {y | ∃ x, x ∈ s ∧ f x = y}. So a hypothesis y ∈ f '' s decomposes to a triple ⟨x, xs, xeq⟩ with x : α satisfying the hypotheses xs : x ∈ s and xeq : f x = y. The rfl tag in the rintros tactic (see Section 3.2) was made precisely for this sort of situation.

example : f '' (s ∪ t) = f '' s ∪ f '' t :=
begin
  ext y, split,
  { rintros ⟨x, xs | xt, rfl⟩,
    { left, use [x, xs] },
    right, use [x, xt] },
  rintros (⟨x, xs, rfl⟩ | ⟨x, xt, rfl⟩),
  { use [x, or.inl xs] },
  use [x, or.inr xt]
end

Notice also that the use tactic applies refl to close goals when it can.
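
The two directions of working with images follow a common pattern. To prove f x ∈ f '' s, supply the triple ⟨x, xs, rfl⟩; to use a hypothesis y ∈ f '' s, destructure it with the rfl pattern. Here is a sketch under the same Lean 3 and mathlib assumptions, where P is an arbitrary predicate introduced for illustration:

```lean
import data.set.lattice

open set

variables {α β : Type*} (f : α → β) (s : set α)

-- An element x of s gives the witness ⟨x, xs, rfl⟩ for f x ∈ f '' s.
example (x : α) (xs : x ∈ s) : f x ∈ f '' s := ⟨x, xs, rfl⟩

-- Going the other way, the `rfl` pattern replaces y by f x in the goal.
example (P : β → Prop) (h : ∀ x ∈ s, P (f x)) : ∀ y ∈ f '' s, P y :=
begin
  rintros y ⟨x, xs, rfl⟩,
  exact h x xs
end
```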

Here is another example:

example : s ⊆ f ⁻¹' (f '' s) :=
begin
  intros x xs,
  show f x ∈ f '' s,
  use [x, xs]
end

We can replace the line use [x, xs] by apply mem_image_of_mem f xs if we want to use a theorem specifically designed for that purpose. But knowing that the image is defined in terms of an existential quantifier is often convenient.

The following equivalence is a good exercise:

example : f '' s ⊆ v ↔ s ⊆ f ⁻¹' v :=
sorry

It shows that image f and preimage f are an instance of what is known as a Galois connection between set α and set β, each partially ordered by the subset relation. In the library, this equivalence is named image_subset_iff. In practice, the right-hand side is often the more useful representation, because y ∈ f ⁻¹' t unfolds to f y ∈ t whereas working with x ∈ f '' s requires decomposing an existential quantifier.

Here is a long list of set-theoretic identities for you to enjoy. You don’t have to do all of them at once; do a few of them, and set the rest aside for a rainy day.

example (h : injective f) : f ⁻¹' (f '' s) ⊆ s :=
sorry

example : f '' (f⁻¹' u) ⊆ u :=
sorry

example (h : surjective f) : u ⊆ f '' (f⁻¹' u) :=
sorry

example (h : s ⊆ t) : f '' s ⊆ f '' t :=
sorry

example (h : u ⊆ v) : f ⁻¹' u ⊆ f ⁻¹' v :=
sorry

example : f ⁻¹' (u ∪ v) = f ⁻¹' u ∪ f ⁻¹' v :=
sorry

example : f '' (s ∩ t) ⊆ f '' s ∩ f '' t :=
sorry

example (h : injective f) : f '' s ∩ f '' t ⊆ f '' (s ∩ t) :=
sorry

example : f '' s \ f '' t ⊆ f '' (s \ t) :=
sorry

example : f ⁻¹' u \ f ⁻¹' v ⊆ f ⁻¹' (u \ v) :=
sorry

example : f '' s ∩ v = f '' (s ∩ f ⁻¹' v) :=
sorry

example : f '' (s ∩ f ⁻¹' u) ⊆ f '' s ∪ u :=
sorry

example : s ∩ f ⁻¹' u ⊆ f ⁻¹' (f '' s ∩ u) :=
sorry

example : s ∪ f ⁻¹' u ⊆ f ⁻¹' (f '' s ∪ u) :=
sorry

You can also try your hand at the next group of exercises, which characterize the behavior of images and preimages with respect to indexed unions and intersections. In the third exercise, the argument i : I is needed to guarantee that the index set is nonempty. To prove any of these, we recommend using ext or intro to unfold the meaning of an equation or inclusion between sets, and then calling simp to unpack the conditions for membership.

variables {I : Type*} (A : I → set α) (B : I → set β)

example : f '' (⋃ i, A i) = ⋃ i, f '' A i :=
begin
  ext y, simp,
  split,
  { rintros ⟨x, ⟨i, xAi⟩, fxeq⟩,
    use [i, x, xAi, fxeq] },
  rintros ⟨i, x, xAi, fxeq⟩,
  exact ⟨x, ⟨i, xAi⟩, fxeq⟩
end

example : f '' (⋂ i, A i) ⊆ ⋂ i, f '' A i :=
begin
  intro y, simp,
  intros x h fxeq i,
  use [x, h i, fxeq],
end

example (i : I) (injf : injective f) :
  (⋂ i, f '' A i) ⊆ f '' (⋂ i, A i) :=
begin
  intro y, simp,
  intro h,
  rcases h i with ⟨x, xAi, fxeq⟩,
  use x, split,
  { intro i',
    rcases h i' with ⟨x', x'Ai, fx'eq⟩,
    have : f x = f x', by rw [fxeq, fx'eq],
    have : x = x', from injf this,
    rw this,
    exact x'Ai },
  exact fxeq
end

example : f ⁻¹' (⋃ i, B i) = ⋃ i, f ⁻¹' (B i) :=
by { ext x, simp }

example : f ⁻¹' (⋂ i, B i) = ⋂ i, f ⁻¹' (B i) :=
by { ext x, simp }

The library defines a predicate inj_on f s to say that f is injective on s. It is defined as follows:

example : inj_on f s ↔
  ∀ x₁ ∈ s, ∀ x₂ ∈ s, f x₁ = f x₂ → x₁ = x₂ :=
iff.refl _

The statement injective f is provably equivalent to inj_on f univ. Similarly, the library defines range f to be {x | ∃y, f y = x}, so range f is provably equal to f '' univ.
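
Both facts are easy to check in a sketch, assuming Lean 3 with mathlib. The first uses the definition of range directly. The second cites image_univ, the library lemma relating f '' univ and range f.

```lean
import data.set.lattice

open set

variables {α β : Type*} (f : α → β)

-- Every value f x lies in range f; the witness is x itself.
example (x : α) : f x ∈ range f := ⟨x, rfl⟩

-- The library records that the image of univ is the range.
example : f '' univ = range f := image_univ
```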

This is a common theme in mathlib: although many properties of functions are defined relative to their full domain, there are often relativized versions that restrict the statements to a subset of the domain type.

Here are some examples of inj_on and range in use:

open set real

example : inj_on log { x | x > 0 } :=
begin
  intros x xpos y ypos,
  intro e, -- log x = log y
  calc
    x   = exp (log x) : by rw exp_log xpos
    ... = exp (log y) : by rw e
    ... = y           : by rw exp_log ypos
end

example : range exp = { y | y > 0 } :=
begin
  ext y, split,
  { rintros ⟨x, rfl⟩,
    apply exp_pos },
  intro ypos,
  use log y,
  rw exp_log ypos
end

Try proving these:

example : inj_on sqrt { x | x ≥ 0 } :=
sorry

example : inj_on (λ x, x^2) { x : ℝ | x ≥ 0 } :=
sorry

example : sqrt '' { x | x ≥ 0 } = {y | y ≥ 0} :=
sorry

example : range (λ x, x^2) = {y : ℝ | y ≥ 0} :=
sorry

To define the inverse of a function f : α → β, we will use two new ingredients. First, we need to deal with the fact that an arbitrary type in Lean may be empty. To define the inverse to f at y when there is no x satisfying f x = y, we want to assign a default value in α. Adding the annotation [inhabited α] as a variable is tantamount to assuming that α has a preferred element, which is denoted default. Second, in the case where there is more than one x such that f x = y, the inverse function needs to choose one of them. This requires an appeal to the axiom of choice. Lean allows various ways of accessing it; one convenient method is to use the classical.some operator, illustrated below.

variables {α β : Type*} [inhabited α]

#check (default : α)

variables (P : α → Prop) (h : ∃ x, P x)

#check classical.some h

example : P (classical.some h) := classical.some_spec h

Given h : ∃ x, P x, the value of classical.some h is some x satisfying P x. The theorem classical.some_spec h says that classical.some h meets this specification.
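
Here is a concrete sketch using only core Lean 3. The names exists_gt_three and some_big are hypothetical. The point is that classical.some picks out an unspecified witness, so the definition must be marked noncomputable. classical.some_spec is then the only information we have about that witness.

```lean
-- Hypothetical names for illustration: `exists_gt_three` and `some_big`.
theorem exists_gt_three : ∃ n : ℕ, 3 < n :=
⟨4, show 3 < 4, from dec_trivial⟩

-- `classical.some` extracts a witness; it cannot be computed.
noncomputable def some_big : ℕ := classical.some exists_gt_three

-- `classical.some_spec` guarantees that the witness has the property.
example : 3 < some_big := classical.some_spec exists_gt_three
```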

With these in hand, we can define the inverse function as follows:

noncomputable theory
open_locale classical

def inverse (f : α → β) : β → α :=
λ y : β, if h : ∃ x, f x = y then classical.some h else default

theorem inverse_spec {f : α → β} (y : β) (h : ∃ x, f x = y) :
  f (inverse f y) = y :=
begin
  rw inverse, dsimp, rw dif_pos h,
  exact classical.some_spec h
end

The lines noncomputable theory and open_locale classical are needed because we are using classical logic in an essential way. On input y, the function inverse f returns some value of x satisfying f x = y if there is one, and a default element of α otherwise. This is an instance of a dependent if construction, since in the positive case, the value returned, classical.some h, depends on the assumption h. The identity dif_pos h rewrites if h : e then a else b to a given h : e, and, similarly, dif_neg h rewrites it to b given h : ¬ e. The theorem inverse_spec says that inverse f meets the first part of this specification.
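
The following sketch uses only core Lean 3. It shows dif_pos and dif_neg on a generic dependent if, together with if_pos for the ordinary, non-dependent version. The functions t and e are arbitrary placeholders introduced for illustration.

```lean
-- In `if h' : c then t h' else e h'`, each branch may use the hypothesis.
-- `dif_pos` and `dif_neg` evaluate the expression once `c` is decided.
example (c : Prop) [decidable c] (t : c → ℕ) (e : ¬ c → ℕ) (h : c) :
  (if h' : c then t h' else e h') = t h :=
dif_pos h

example (c : Prop) [decidable c] (t : c → ℕ) (e : ¬ c → ℕ) (h : ¬ c) :
  (if h' : c then t h' else e h') = e h :=
dif_neg h

-- For an ordinary (non-dependent) `if`, the analogue is `if_pos` (and `if_neg`).
example (c : Prop) [decidable c] (a b : ℕ) (h : c) :
  (if c then a else b) = a :=
if_pos h
```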

Don’t worry if you do not fully understand how these work. The theorem inverse_spec alone should be enough to show that inverse f is a left inverse if and only if f is injective and a right inverse if and only if f is surjective. Look up the definition of left_inverse and right_inverse by double-clicking or right-clicking on them in VS Code, or using the commands #print left_inverse and #print right_inverse. Then try to prove the two theorems. They are tricky! It helps to do the proofs on paper before you start hacking through the details. You should be able to prove each of them with about a half-dozen short lines. If you are looking for an extra challenge, try to condense each proof to a single-line proof term.

variable f : α → β
open function

example : injective f ↔ left_inverse (inverse f) f :=
sorry

example : surjective f ↔ right_inverse (inverse f) f :=
sorry

We close this section with a type-theoretic statement of Cantor’s famous theorem that there is no surjective function from a set to its power set. See if you can understand the proof, and then fill in the two lines that are missing.

theorem Cantor : ∀ f : α → set α, ¬ surjective f :=
begin
  intros f surjf,
  let S := { i | i ∉ f i},
  rcases surjf S with ⟨j, h⟩,
  have h₁ : j ∉ f j,
  { intro h',
    have : j ∉ f j,
      { by rwa h at h' },
    contradiction },
  have h₂ : j ∈ S,
    sorry,
  have h₃ : j ∉ S,
    sorry,
  contradiction
end

4.3. The Schröder-Bernstein Theorem

We close this chapter with an elementary but nontrivial theorem of set theory. Let \(\alpha\) and \(\beta\) be sets. (In our formalization, they will actually be types.) Suppose \(f : \alpha \to \beta\) and \(g : \beta \to \alpha\) are both injective. Intuitively, this means that \(\alpha\) is no bigger than \(\beta\) and vice-versa. If \(\alpha\) and \(\beta\) are finite, this implies that they have the same cardinality, which is equivalent to saying that there is a bijection between them. In the nineteenth century, Cantor stated that the same result holds even in the case where \(\alpha\) and \(\beta\) are infinite. This was eventually established by Dedekind, Schröder, and Bernstein independently.

Our formalization will introduce some new methods that we will explain in greater detail in chapters to come. Don’t worry if they go by too quickly here. Our goal is to show you that you already have the skills to contribute to the formal proof of a real mathematical result.

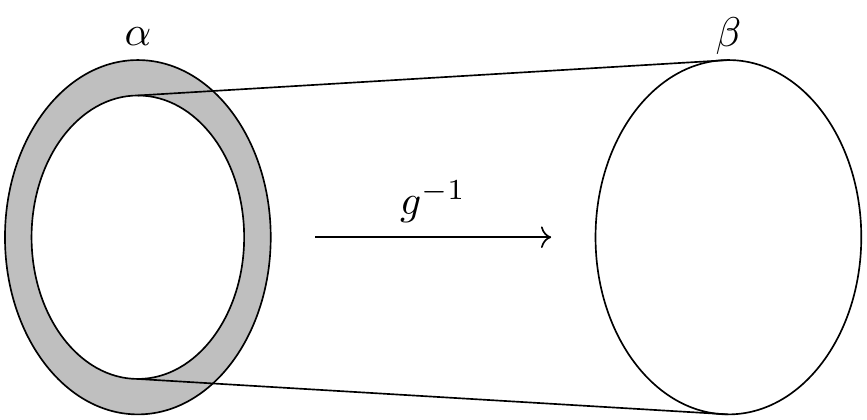

To understand the idea behind the proof, consider the image of the map \(g\) in \(\alpha\). On that image, the inverse of \(g\) is defined and is a bijection with \(\beta\).

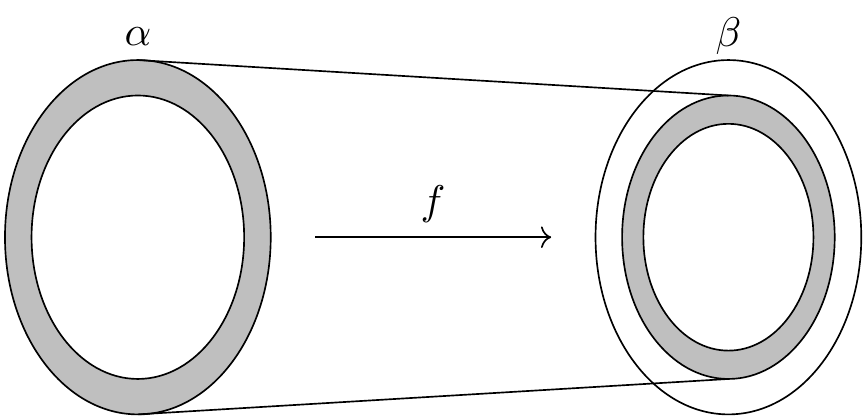

The problem is that the bijection does not include the shaded region in the diagram, which is nonempty if \(g\) is not surjective. Alternatively, we can use \(f\) to map all of \(\alpha\) to \(\beta\), but in that case the problem is that if \(f\) is not surjective, it will miss some elements of \(\beta\).

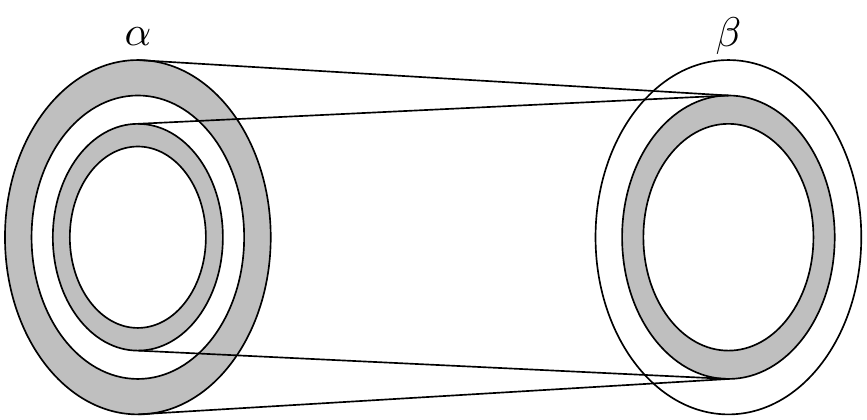

But now consider the composition \(g \circ f\) from \(\alpha\) to itself. Because the composition is injective, it forms a bijection between \(\alpha\) and its image, yielding a scaled-down copy of \(\alpha\) inside itself.

This composition maps the inner shaded ring to yet another such set, which we can think of as an even smaller concentric shaded ring, and so on. This yields a concentric sequence of shaded rings, each of which is in bijective correspondence with the next. If we map each ring to the next and leave the unshaded parts of \(\alpha\) alone, we have a bijection of \(\alpha\) with the image of \(g\). Composing with \(g^{-1}\), this yields the desired bijection between \(\alpha\) and \(\beta\).

We can describe this bijection more simply. Let \(A\) be the union of the sequence of shaded regions, and define \(h : \alpha \to \beta\) as follows:

\[
h(x) = \begin{cases} f(x) & \text{if } x \in A, \\ g^{-1}(x) & \text{otherwise.} \end{cases}
\]

In other words, we use \(f\) on the shaded parts, and we use the inverse of \(g\) everywhere else. The resulting map \(h\) is injective because each component is injective and the images of the two components are disjoint. To see that it is surjective, suppose we are given a \(y\) in \(\beta\), and consider \(g(y)\). If \(g(y)\) is in one of the shaded regions, it cannot be in the first ring, so we have \(g(y) = g(f(x))\) for some \(x\) in the previous ring. By the injectivity of \(g\), we have \(h(x) = f(x) = y\). If \(g(y)\) is not in the shaded region, then by the definition of \(h\), we have \(h(g(y))= y\). Either way, \(y\) is in the image of \(h\).

This argument should sound plausible, but the details are delicate. Formalizing the proof will not only improve our confidence in the result, but also help us understand it better. Because the proof uses classical logic, we tell Lean that our definitions will generally not be computable.

noncomputable theory
open_locale classical
open set function

variables {α β : Type*} [nonempty β]

The annotation [nonempty β] specifies that β is nonempty. We use it because the mathlib primitive that we will use to construct \(g^{-1}\) requires it. The case of the theorem where \(\beta\) is empty is trivial, and even though it would not be hard to generalize the formalization to cover that case as well, we will not bother. Specifically, we need the hypothesis [nonempty β] for the operation inv_fun that is defined in mathlib. Given x : α, inv_fun g x chooses a preimage of x in β if there is one, and returns an arbitrary element of β otherwise. The function inv_fun g is always a left inverse if g is injective and a right inverse if g is surjective.

section
variables (g : β → α) (x : α)

#check (inv_fun g : α → β)
#check (left_inverse_inv_fun : injective g → left_inverse (inv_fun g) g)
#check (left_inverse_inv_fun : injective g → ∀ y, inv_fun g (g y) = y)
#check (inv_fun_eq : (∃ y, g y = x) → g (inv_fun g x) = x)

end

We define the set corresponding to the union of the shaded regions as follows.

variables (f : α → β) (g : β → α)

def sb_aux : ℕ → set α
| 0       := univ \ (g '' univ)
| (n + 1) := g '' (f '' sb_aux n)

def sb_set := ⋃ n, sb_aux f g n

The definition sb_aux is an example of a recursive definition, which we will explain in the next chapter. It defines a sequence of sets \(S_0, S_1, S_2, \ldots\), where \(S_0 = \alpha \setminus g(\beta)\) and \(S_{n+1} = g(f(S_n))\). The definition sb_set corresponds to the set \(A = \bigcup_{n \in \mathbb{N}} S_n\) in our proof sketch. The function \(h\) described above is now defined as follows:

def sb_fun (x : α) : β := if x ∈ sb_set f g then f x else inv_fun g x

We will need the fact that our definition of \(g^{-1}\) is a right inverse on the complement of \(A\), which is to say, on the non-shaded regions of \(\alpha\). This is so because the outermost ring, \(S_0\), is equal to \(\alpha \setminus g(\beta)\), so the complement of \(A\) is contained in \(g(\beta)\). As a result, for every \(x\) in the complement of \(A\), there is a \(y\) such that \(g(y) = x\). (By the injectivity of \(g\), this \(y\) is unique, but the next theorem says only that inv_fun g x returns some y such that g y = x.)

Step through the proof below, make sure you understand what is going on, and fill in the remaining parts. You will need to use inv_fun_eq at the end. Notice that rewriting with sb_aux here replaces sb_aux f g 0 with the right-hand side of the corresponding defining equation.

theorem sb_right_inv {x : α} (hx : x ∉ sb_set f g) :
  g (inv_fun g x) = x :=
begin
  have : x ∈ g '' univ,
  { contrapose! hx,
    rw [sb_set, mem_Union],
    use [0],
    rw [sb_aux, mem_diff],
    sorry },
  have : ∃ y, g y = x,
  { sorry },
  sorry
end

We now turn to the proof that \(h\) is injective. Informally, the proof goes as follows. First, suppose \(h(x_1) = h(x_2)\). If \(x_1\) is in \(A\), then \(h(x_1) = f(x_1)\), and we can show that \(x_2\) is in \(A\) as follows. If it isn’t, then we have \(h(x_2) = g^{-1}(x_2)\). From \(f(x_1) = h(x_1) = h(x_2) = g^{-1}(x_2)\), applying \(g\) to both sides and using the fact that \(g^{-1}\) is a right inverse outside \(A\), we have \(g(f(x_1)) = x_2\). If \(x_1\) is in \(S_n\), then \(x_2 = g(f(x_1))\) is in \(S_{n+1}\), so \(x_2\) is in \(A\) as well, a contradiction. Hence, if \(x_1\) is in \(A\), so is \(x_2\), in which case we have \(f(x_1) = h(x_1) = h(x_2) = f(x_2)\). The injectivity of \(f\) then implies \(x_1 = x_2\). The symmetric argument shows that if \(x_2\) is in \(A\), then so is \(x_1\), which again implies \(x_1 = x_2\).

The only remaining possibility is that neither \(x_1\) nor \(x_2\) is in \(A\). In that case, we have \(g^{-1}(x_1) = h(x_1) = h(x_2) = g^{-1}(x_2)\). Applying \(g\) to both sides yields \(x_1 = x_2\).

Once again, we encourage you to step through the following proof to see how the argument plays out in Lean. See if you can finish off the proof using sb_right_inv.

theorem sb_injective (hf: injective f) (hg : injective g) :
  injective (sb_fun f g) :=
begin
  set A := sb_set f g with A_def,
  set h := sb_fun f g with h_def,
  intros x₁ x₂,
  assume hxeq : h x₁ = h x₂,
  show x₁ = x₂,
  simp only [h_def, sb_fun, ←A_def] at hxeq,
  by_cases xA : x₁ ∈ A ∨ x₂ ∈ A,
  { wlog x₁A : x₁ ∈ A generalizing x₁ x₂ hxeq xA,
    { symmetry, apply this hxeq.symm xA.swap (xA.resolve_left x₁A) },
    have x₂A : x₂ ∈ A,
    { apply not_imp_self.mp,
      assume x₂nA : x₂ ∉ A,
      rw [if_pos x₁A, if_neg x₂nA] at hxeq,
      rw [A_def, sb_set, mem_Union] at x₁A,
      have x₂eq : x₂ = g (f x₁),
      { sorry },
      rcases x₁A with ⟨n, hn⟩,
      rw [A_def, sb_set, mem_Union],
      use n + 1,
      simp [sb_aux],
      exact ⟨x₁, hn, x₂eq.symm⟩, },
    sorry },
  push_neg at xA,
  sorry
end

The proof introduces some new tactics. To start with, notice the set tactic, which introduces abbreviations A and h for sb_set f g and sb_fun f g respectively. We name the corresponding defining equations A_def and h_def. The abbreviations are definitional, which is to say, Lean will sometimes unfold them automatically when needed. But not always; for example, when using rw, we generally need to use A_def and h_def explicitly. So the definitions bring a tradeoff: they can make expressions shorter and more readable, but they sometimes require us to do more work.

A more interesting tactic is the wlog tactic, which encapsulates the symmetry argument in the informal proof above. We will not dwell on it now, but notice that it does exactly what we want. If you hover over the tactic you can take a look at its documentation.

The argument for surjectivity is even easier. Given \(y\) in \(\beta\), we consider two cases, depending on whether \(g(y)\) is in \(A\). If it is, it can’t be in \(S_0\), the outermost ring, because by definition that is disjoint from the image of \(g\). Thus it is an element of \(S_{n+1}\) for some \(n\). This means that it is of the form \(g(f(x))\) for some \(x\) in \(S_n\). By the injectivity of \(g\), we have \(f(x) = y\), and since \(x\) is in \(A\), this gives \(h(x) = f(x) = y\). In the case where \(g(y)\) is in the complement of \(A\), we immediately have \(h(g(y))= y\), and we are done.

Once again, we encourage you to step through the proof and fill in the missing parts. The tactic cases n with n splits into the cases g y ∈ sb_aux f g 0 and g y ∈ sb_aux f g n.succ. In both cases, calling the simplifier with simp [sb_aux] applies the corresponding defining equation of sb_aux.

theorem sb_surjective (hf: injective f) (hg : injective g) :
  surjective (sb_fun f g) :=
begin
  set A := sb_set f g with A_def,
  set h := sb_fun f g with h_def,
  intro y,
  by_cases gyA : g y ∈ A,
  { rw [A_def, sb_set, mem_Union] at gyA,
    rcases gyA with ⟨n, hn⟩,
    cases n with n,
    { simp [sb_aux] at hn,
      contradiction },
    simp [sb_aux] at hn,
    rcases hn with ⟨x, xmem, hx⟩,
    use x,
    have : x ∈ A,
    { rw [A_def, sb_set, mem_Union],
      exact ⟨n, xmem⟩ },
    simp only [h_def, sb_fun, if_pos this],
    exact hg hx },
  sorry
end

We can now put it all together. The final statement is short and sweet, and the proof uses the fact that bijective h unfolds to injective h ∧ surjective h.

theorem schroeder_bernstein {f : α → β} {g : β → α}
    (hf: injective f) (hg : injective g) :
  ∃ h : α → β, bijective h :=
⟨sb_fun f g, sb_injective f g hf hg, sb_surjective f g hf hg⟩